Evidently we haven't been reading the same reviews. Sure, we can compare apples and oranges all day but when you say "faster" that assumes many things. I don't even think I could do the workload with your i7-13650HX that I can do with my desktop. I'd likely bog it down as it wouldn't even have the system memory to handle some of the stuff I do. But again, this is about user preference and using the right tool for the right job.

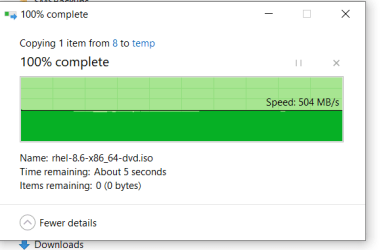

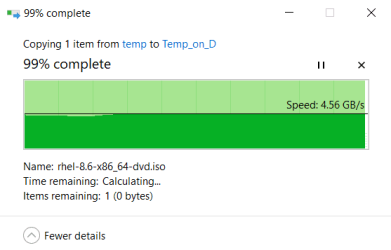

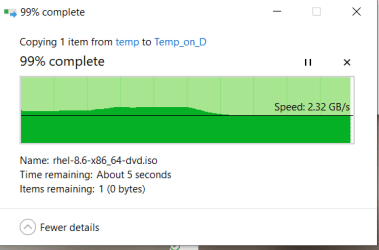

To anyone who might still be reading this particular part of the discussion: there's no way of guessing how much RAM you might need if you don't give us any details whatsoever about what you mean when you say things like "some of the stuff I do". I use a Ramdisk all the time, and was using it all the time even before I had 16GB of RAM, which I'm sure everyone can agree still isn't that much RAM either. A lot also depends on what data needs to be put on the Ramdisk versus not, and when. The i7-13650HX does have substantially more CPU processing power on tap than the i9-10900X, and it even beats the OP's i5-12600KF (non-overclocked) in real-world tasks by some margin, with very few exceptions. I know that it's only a synthetic benchmark, but the i7-13650HX scores about 21,000 in multi-threaded Cinebench R23, so the difference is big enough to conclude that it's going to be a faster CPU than the i5-12600KF, and the Passmark score confirms this conclusion.

So then, does this mean that I have a HELT (high-end laptop) CPU? In addition, the mobile variant of the GeForce RTX 4060 is not too far behind the desktop (also non overclocked) variant of it BTW. I apologize for spoiling all the fun... which I did on purpose. But I still want my extra brownie points! lol

The i9-10900X is a 7th-gen refresh, so it's definitely NOT bleeding edge.

It is what can be best described as a remnant of yore. Aged like fine milk.

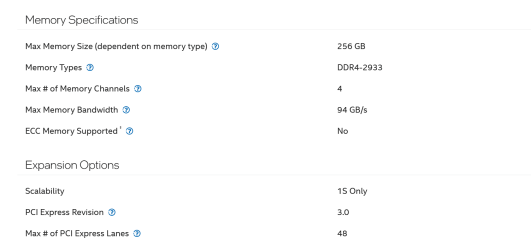

Not even close. I was comparing my practically vintage workstation to a 20-lane chip.

28

If Intel says it's 20 lanes then it's likely 20 lanes no matter how much anyone wants to trump it up to make it something else. I have PCIe 4.0 on another build and frankly, I'm really not all that impressed. The theoretical argument is dubious at best when applied to real time working environments. You likely know this already. So 20 lanes. Fancy lanes, sure. Still, 20 lanes.

The 8x DMI 4.0 lanes are pink elephants offering an additional equivalent of 8 lanes of PCIe 4.0 bandwidth. It uses the Z790 chipset to make that extra bandwidth available (with gremlins), which boils down to the fact that it has to share this extra bandwidth with all the other I/O features (SATA/USB/network/SST/...) that have been implemented through the PCH. Depending on the hardware limitations of the Z790 motherboard used, and on which PCIe expansion cards are installed on it (and how much of the extra bandwidth will be shared with the other I/O features), it can be possible to run, e.g., 2x additional NVMe 4.0 x4 drives with only minimal losses.

In short, even with some data being transferred through USB and/or over the network, it's rarely going to hinder SSD performance in any way that you'd notice in the real world, as data transfers usually occur in bursts. This is also part of why servers and heavy workstations don't always use 4 lanes for each NVMe SSD, as they can often get away with only 2 lanes per M.2 NVMe slot (on some of the slots at least). Another important reason (among various other criteria) is that server or server-like systems often rely more heavily on SLC NAND to sustain the performance factors that relate to SSD storage.
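For anyone who wants to check the arithmetic behind the lane talk above, here is a rough Python sketch. It assumes only the published PCIe 4.0 figures (16 GT/s per lane, 128b/130b encoding) and treats the DMI 4.0 x8 uplink as equivalent to 8 such lanes, as described above. The function names and the `other_io_gb_s` parameter are made up for illustration, and the numbers are theoretical ceilings, not measured throughput.

```python
# Theoretical PCIe 4.0 bandwidth arithmetic (ceilings, not benchmarks).
PCIE4_GT_PER_S = 16.0              # PCIe 4.0 signalling rate per lane, in GT/s
ENCODING_EFFICIENCY = 128 / 130    # 128b/130b line encoding overhead


def pcie4_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a PCIe 4.0 link."""
    return lanes * PCIE4_GT_PER_S * ENCODING_EFFICIENCY / 8  # bits -> bytes


def uplink_headroom_gb_s(uplink_lanes, device_lanes, other_io_gb_s=0.0):
    """Bandwidth left on the chipset uplink if every device peaked at once.

    uplink_lanes  : lanes on the CPU<->chipset link (8 for the x8 DMI 4.0 case)
    device_lanes  : lane counts of the NVMe drives hanging off the chipset
    other_io_gb_s : hypothetical allowance for USB/SATA/network traffic
    A negative result means the uplink is oversubscribed at peak.
    """
    demand = sum(pcie4_bandwidth_gb_s(n) for n in device_lanes) + other_io_gb_s
    return pcie4_bandwidth_gb_s(uplink_lanes) - demand


if __name__ == "__main__":
    print(f"x8 uplink:        {pcie4_bandwidth_gb_s(8):.2f} GB/s")
    print(f"one x4 NVMe:      {pcie4_bandwidth_gb_s(4):.2f} GB/s")
    print(f"headroom, 2x x4:  {uplink_headroom_gb_s(8, [4, 4]):.2f} GB/s")
```

On paper, two x4 drives running flat out at the same time would use the whole x8 uplink, so the sharing only bites when everything peaks at once, and bursty traffic rarely does.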

When I first built my work station with my meagre 128 GB of RAM I was hit by the same old worn out questions you're asking me. I have never regretted getting this much RAM and yes, many times I've put it to good use. In fact, I'm convinced that 256 GB wouldn't hurt at all if I could run it but this platform can't so I just have to be happy with what I have. Yes, I'm going to use that much RAM and I have already and I likely will again.

I've been told to get a Xeon for the kind of things I do, but I also do a lot of standard stuff, so an i9 or an i7 suits me better. To approach this with a broad-brush statement that one platform is "simply better" than another is rather over-simplistic IMO. Better in what way? Better for whom? There is no platform that is better overall for everyone.

Again, as long as you tell us nothing about what you use it for (and how you use it) besides hollow statements like "many times I've put it to good use", you definitely still aren't making any real sense. Just to put a few things back into perspective: even though it certainly is true that synthetic benchmarks aren't a fully accurate representation of real-world performance, the Passmark (CPU Mark) score of the i7-13700K is more than double that of the i9-10900X, and, although prices can always vary of course, even the "expensive" i9-13900K still scores a whopping 2.8 times better value (CPU Mark divided by $Price) than this same i9-10900X.
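The "value" figure above is just CPU Mark divided by price, and the comparison is the ratio of two such figures. A minimal Python sketch of that arithmetic follows; the function names are hypothetical and the numbers in the example are placeholders, not real scores or prices, so plug in current ones before drawing conclusions.

```python
def value_score(cpu_mark: float, price_usd: float) -> float:
    """CPU Mark points per dollar."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return cpu_mark / price_usd


def value_ratio(mark_a, price_a, mark_b, price_b) -> float:
    """How many times better value chip A is than chip B."""
    return value_score(mark_a, price_a) / value_score(mark_b, price_b)


if __name__ == "__main__":
    # Placeholder inputs for illustration only (NOT real benchmark data).
    ratio = value_ratio(mark_a=30_000, price_a=300, mark_b=20_000, price_b=500)
    print(f"chip A is {ratio:.1f}x the value of chip B")
```

Since prices move around a lot, the ratio is only as good as the day you looked them up.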

Anyway, I stated beforehand that this chip was tempting for me and now I just discovered that I can get it for around $300 US if I look in the right places. That makes it even more tempting. Alas, the prospect of getting a new system board and all the stuff that goes with it is not so tempting. So I'll settle for what I have even if it isn't Windows 11 compliant like the i9 10900X.

That was my whole point to begin with. We live in a hyper-capitalist world. The pink elephants always win. The snakes are hissing at the nude customer's crown.

www.intel.com